From - Shorit

Edited by - Amal Udawatta

Explore the fascinating concept of the human eye's resolution, estimated at around 576 megapixels, and understand its unique, dynamic visual capabilities.

By Leonardo Cavazzana

The resolution of the human eye is often compared to that of digital cameras and is estimated to be around 576 megapixels.

However, this comparison is not straightforward due to the eye’s complex and dynamic nature.

Unlike a camera sensor, the eye has a non-uniform resolution, with the highest density of receptors in a small central area of the retina called the fovea.

This means the eye perceives details with high resolution only in the center of its field of view, while peripheral vision is much less detailed.

So, what is the resolution of the human eye? Let’s take a closer look at resolution, megapixels and how it all relates to the human eye.

What is Resolution and How Is it Measured?

Resolution is a term that can have different meanings in different fields of technology. Here, we’ll focus on photography and digital imaging.

Resolution is a measure of the number of pixels – picture elements or individual dots of color – that can be present on a digital camera sensor or display screen.

In other words, resolution relates to the sharpness or clarity of an image or photograph. It is expressed as the number of pixels that can be displayed horizontally and vertically, for example 1920 × 1080.
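Because resolution is counted as pixels across by pixels down, the megapixel figure is simply their product divided by one million. A minimal Python sketch; the sizes used here (1920 × 1080 for a Full HD screen, 6000 × 4000 for a typical 24-megapixel camera) are illustrative examples, not figures from this article.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count, in millions (megapixels)."""
    return width_px * height_px / 1_000_000


# Illustrative sizes: a Full HD screen and a typical 24 MP camera sensor.
examples = {"Full HD screen": (1920, 1080), "24 MP camera": (6000, 4000)}
for name, (w, h) in examples.items():
    print(f"{name}: {w} x {h} = {megapixels(w, h):.1f} MP")
```

This prints 2.1 MP for the Full HD screen and 24.0 MP for the camera.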

Resolution is an important factor in measuring the visual quality of digital images, photos and videos.

A higher-resolution image contains more pixels, so it can hold more visual information.

This means that a high-resolution image can look sharper and clearer than a low-resolution one.

A camera’s resolution is usually stated as its total number of pixels, in megapixels (millions of pixels). The pixel density of a screen or digital image is measured in pixels per inch (PPI), while print resolution is measured in dots per inch (DPI).
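To see how these units differ in practice, here is a minimal Python sketch. It treats DPI as “pixels per printed inch”, which is how the term is commonly used for photo prints (strictly, printer dots and image pixels are not the same thing). The 27-inch 4K monitor and the 6000 × 4000 photo are hypothetical examples.

```python
import math


def screen_ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density of a screen: pixels along the diagonal / diagonal length."""
    return math.hypot(width_px, height_px) / diagonal_in


def print_size_in(width_px: int, height_px: int, dpi: float) -> tuple[float, float]:
    """Print dimensions in inches when each pixel becomes one printed dot."""
    return width_px / dpi, height_px / dpi


# Hypothetical examples: a 27-inch 4K monitor, and a 6000 x 4000 photo printed at 300 DPI.
print(f"27-inch 4K monitor: {screen_ppi(3840, 2160, 27):.0f} PPI")
w, h = print_size_in(6000, 4000, 300)
print(f"6000 x 4000 photo at 300 DPI: {w:.1f} x {h:.1f} inches")
```

The same pixel count gives a different physical size depending on the density it is shown or printed at, which is why PPI and DPI describe output, not the sensor itself.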

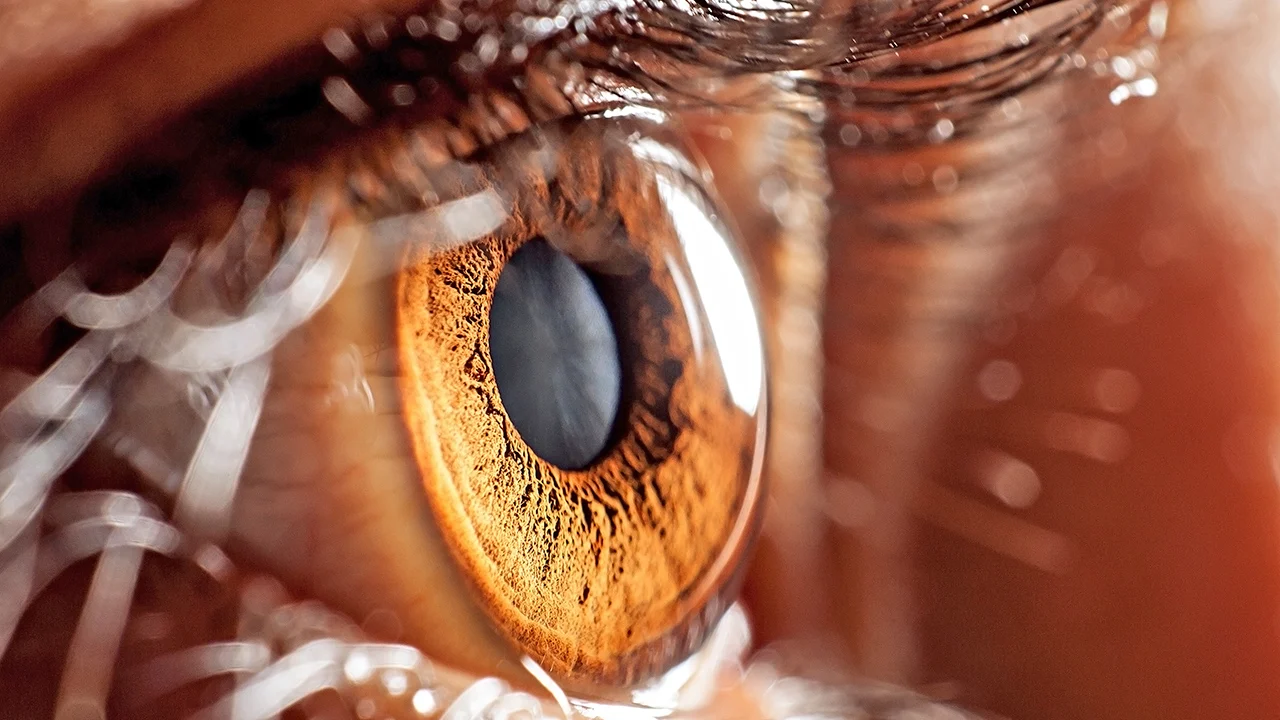

The Resolution of the Human Eye

Image: Shutterstock

When we talk about the resolution of human vision, the subject gets a little more complicated, because it depends on factors such as lighting, viewing distance, and where in the field of view an object appears.

When people talk about the resolution of the human eye, they usually mean visual acuity: the ability to detect tiny details in an image.

So, what is the resolution of our eyes?

According to research by scientist and photographer Roger N. Clark, the resolution of the human eye would be 576 megapixels. That equates to 576,000,000 individual pixels, far more than the roughly 33 million pixels (7680 × 4320) of an 8K TV.
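For a sense of scale, this short Python sketch compares the 576-megapixel estimate with the pixel counts of standard 4K and 8K TVs (3840 × 2160 and 7680 × 4320).

```python
EYE_ESTIMATE_MP = 576  # Clark's estimate, in megapixels

TV_RESOLUTIONS = {"4K UHD": (3840, 2160), "8K UHD": (7680, 4320)}


def times_more_than(width_px: int, height_px: int) -> float:
    """How many times larger the 576 MP estimate is than a screen's pixel count."""
    return EYE_ESTIMATE_MP / (width_px * height_px / 1_000_000)


for name, (w, h) in TV_RESOLUTIONS.items():
    print(f"{name}: {w * h / 1e6:.1f} MP, so 576 MP is ~{times_more_than(w, h):.0f}x more")
```

It reports about 8.3 MP for 4K (roughly 69 times fewer pixels than the estimate) and about 33.2 MP for 8K (roughly 17 times fewer).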

The eye is not a single-frame snapshot camera; it works more like a video. The image we perceive is built up by our rapidly moving eyes, which take in a ton of information.

Our eyes don’t capture all visual information equally: detail is sharpest where we look directly. And since we have two eyes, our brain combines their signals to increase the resolution even further.

Normally, we move our eyes around the scene to gather more information.

Because of these factors, the eye and brain together assemble an image with a higher resolution than the number of photoreceptors in the retina alone would allow.

Therefore, the megapixel equivalent above describes the spatial detail an image would need in order to show everything the human eye can take in across a scene.

However, any measurement of the eye’s resolution has to account for the fovea. The fovea is a small but fundamental part of the retina that plays an essential role in our ability to see fine details and perceive colors.

We resolve fine detail only within the small foveal region, which is why some estimates put what we see in the foveal range at only around 7 megapixels.

How the Human Eye Differs From a Camera

Speaking of resolution, one question that remains is: what is the difference between human eyes and cameras?

The three main differences between human eyes and cameras are:

- How they focus on objects

- How they process different colors

- What they can see in a scene

Focus

Human eyes keep moving objects in focus by changing the shape and thickness of the lens inside the eye. Small ciliary muscles contract and relax to reshape it, a process called accommodation.

A camera lens works differently: its glass elements don’t change shape, so to keep a moving object in focus they are moved mechanically, closer to or farther from the sensor.
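The difference can be illustrated with the thin-lens equation, 1/f = 1/d_o + 1/d_i (focal length f, subject distance d_o, lens-to-image distance d_i). This Python sketch is a simplification: it treats both the eye and the camera lens as single thin lenses, and the 50 mm focal length is a hypothetical example. A camera keeps f fixed and moves the lens, changing d_i; the eye keeps d_i (the lens-to-retina distance) fixed and changes f, so the extra power it needs, in diopters, works out to 1 divided by the subject distance in meters.

```python
def lens_to_sensor_mm(focal_mm: float, subject_mm: float) -> float:
    """Camera: solve 1/f = 1/d_o + 1/d_i for d_i with the focal length fixed."""
    return 1 / (1 / focal_mm - 1 / subject_mm)


def accommodation_diopters(subject_m: float) -> float:
    """Eye: extra lens power (1/m) needed to refocus from infinity to subject_m.

    With the lens-to-retina distance fixed, the required change in 1/f is 1/d_o.
    """
    return 1 / subject_m


# Camera with a hypothetical 50 mm lens: the lens moves away from the sensor.
for d in (10_000, 1_000, 500):  # subject distances in mm
    print(f"camera, subject at {d} mm: lens {lens_to_sensor_mm(50, d):.2f} mm from sensor")

# Eye: the lens gets stronger instead of moving.
for d in (10.0, 1.0, 0.25):  # subject distances in m
    print(f"eye, subject at {d} m: +{accommodation_diopters(d):.1f} D")
```

In this model the camera lens shifts by a few millimeters as the subject comes closer, while the eye’s lens has to add about 4 diopters of power to focus at 25 cm.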

Processing Color Varieties

Our retinas contain cones, the photoreceptors responsible for color vision.

There are three types of cones, each responding to a different range of wavelengths of light. Red cones respond to long wavelengths, green cones to medium wavelengths, and blue cones to short wavelengths.

By comparing the signals from the three types of cones, the brain perceives the full range of colors.

Cameras, by contrast, use a single type of photosite covered by a mosaic of red, green, and blue filters.

On the sensor, these filters are distributed evenly across the whole surface, while the cones in the human eye are concentrated in the fovea, at the center of the retina.
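The most common filter layout is the Bayer pattern, which repeats a 2 × 2 tile of one red, two green, and one blue filter. Other layouts exist, so treat this Python sketch as an illustration of the idea rather than a description of every sensor. It prints the repeating tile and shows that half of all photosites sit under green filters.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col) for an RGGB Bayer mosaic."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def filter_counts(height: int, width: int) -> Counter:
    """Count the photosites of each color on a height x width sensor."""
    return Counter(bayer_color(r, c) for r in range(height) for c in range(width))


# Print the top-left 4 x 4 corner of the mosaic.
for r in range(4):
    print(" ".join(bayer_color(r, c) for c in range(4)))

# Fraction of photosites per color on a small 400 x 600 example sensor.
counts = filter_counts(400, 600)
print({color: n / (400 * 600) for color, n in counts.items()})
```

Because each photosite records only one color, the camera reconstructs full color for every pixel by interpolating from its neighbors (demosaicing).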

Retina vs. Sensor

The visual field of cameras and human vision can be different.

The main difference between the retina and a camera sensor is that the retina is curved because it’s a component of the eyeball. The sensor is flat.

In addition, the retina contains more light-sensitive cells than most camera sensors have pixels: around 130 million photoreceptors, about 6 million of which are color-sensitive cones.

These cells are not spread evenly; the color-sensing cones are most densely packed in the fovea, at the center of the retina. The pixel density of a camera sensor, on the other hand, is uniform.

Depending on the sensor size, you will get either the full field of view a lens is designed for or a cropped one. A full-frame sensor (36 × 24 mm, the size of a 35mm film frame) captures the lens’s full field of view, while a smaller APS-C sensor crops it by a crop factor of about 1.5× (1.6× on Canon cameras).
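Crop factor is the ratio of the full-frame diagonal to the smaller sensor’s diagonal, and the angle of view follows from the sensor width and the focal length. A minimal Python sketch; the 23.5 × 15.6 mm APS-C size is an assumed typical value (exact dimensions vary by manufacturer), and the 50 mm lens is a hypothetical example.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # width, height


def crop_factor(width_mm: float, height_mm: float) -> float:
    """Full-frame diagonal divided by this sensor's diagonal."""
    return math.hypot(*FULL_FRAME_MM) / math.hypot(width_mm, height_mm)


def horizontal_fov_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Horizontal angle of view for a lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


APS_C_MM = (23.5, 15.6)  # assumed typical APS-C dimensions
focal = 50.0  # hypothetical lens

print(f"APS-C crop factor: {crop_factor(*APS_C_MM):.2f}")
print(f"50 mm on full frame: {horizontal_fov_deg(FULL_FRAME_MM[0], focal):.1f} deg wide")
print(f"50 mm on APS-C: {horizontal_fov_deg(APS_C_MM[0], focal):.1f} deg wide, "
      f"like a {focal * crop_factor(*APS_C_MM):.0f} mm lens on full frame")
```

The lens itself does not change; the smaller sensor simply records a narrower slice of the image it projects.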

Human eyes also have a blind spot where the optic nerve leaves the retina, because there are no photoreceptors there. We usually don’t notice it because the brain fills in the gap, partly using what the other eye captures when looking at the same scene.

In addition, our eyes can move quickly and freely, constantly filling out our view.

Human vision’s dynamic range is substantially greater than that of a camera. We can see details in both low-light and bright-light conditions.

A camera cannot capture as much contrast as the eye. To compensate, photographers use techniques such as exposure bracketing merged into an HDR image, or graduated neutral density filters, to create photographs with a wide contrast range.

But… If the Eye Were a Camera…

Both the eye and the camera have a lens and a light-sensitive surface. In the eye, the iris regulates the amount of light that enters. The retina, a light-sensitive surface located at the back of the eye, sends impulses to the brain via the optic nerve. Finally, the brain interprets what you see.

This process is similar to what happens when a camera takes a photograph. First, the light hits the surface of the camera lens. Next, the …


Both the eye’s lens and a camera lens are convex. They refract the incoming light so that it converges, and the image they form on the retina or sensor is upside down.
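Under a thin-lens approximation, the sign of the magnification shows this inversion: m = -d_i / d_o, where d_o is the subject distance and d_i the lens-to-image distance, and a negative m means the image is upside down. A minimal Python sketch; the 50 mm focal length and 2 m subject distance are hypothetical.

```python
def image_distance(focal_mm: float, subject_mm: float) -> float:
    """Thin-lens equation 1/f = 1/d_o + 1/d_i, solved for d_i."""
    return 1 / (1 / focal_mm - 1 / subject_mm)


def magnification(focal_mm: float, subject_mm: float) -> float:
    """Lateral magnification m = -d_i / d_o; m < 0 means the image is inverted."""
    return -image_distance(focal_mm, subject_mm) / subject_mm


m = magnification(50, 2000)  # hypothetical 50 mm lens, subject 2 m away
print(f"m = {m:.4f} ({'inverted' if m < 0 else 'upright'})")
```

For any subject farther away than the focal length, d_i is positive and m is negative, so the real image a single convex lens forms is always inverted.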

Whether you are looking at a scene, a photo, or a movie, you don’t notice that the image on your retina is upside down, because the brain interprets what the eyes capture and presents it the right way up.

Cameras have to deal with the same inversion. In a DSLR, a mirror and a prism (a pentaprism) flip the image so that it appears right-side up in the optical viewfinder, and the camera orients the image recorded by the sensor correctly when it saves the photo.

Megapixel Equivalent

How many megapixels is the equivalent? As I said above, scientist and photographer Roger N. Clark, based on his calculations, estimates that the eye has a capacity of 576 megapixels. And that figure only considers a field of vision of 120 degrees.

The full angle of human vision would require even more megapixels. This kind of image detail requires a large format camera to record.
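The article doesn’t show how 576 megapixels is reached. One way to reproduce it, sketched in Python: assume a square field of view of 120 degrees on each side, and a smallest resolvable detail of 0.3 arc-minute per pixel. The 0.3 arc-minute value is an assumption here (it is the per-pixel angle that yields exactly 576 MP for a 120-degree field), not a number given in this article.

```python
def equivalent_megapixels(fov_deg: float, arcmin_per_pixel: float) -> float:
    """Megapixels needed to cover a square fov_deg x fov_deg field of view
    when each pixel spans arcmin_per_pixel arc-minutes (60 arc-minutes = 1 degree)."""
    pixels_per_side = fov_deg * 60 / arcmin_per_pixel
    return pixels_per_side ** 2 / 1_000_000


# Assumed detail size: 0.3 arc-minute per pixel.
for fov in (90, 120):
    print(f"{fov} x {fov} degrees: {equivalent_megapixels(fov, 0.3):.0f} MP")
```

Under this assumption, 120 degrees works out to 24,000 pixels per side, and 24,000 × 24,000 = 576 million. Because the count grows with the square of the angle, a wider field quickly pushes the number higher.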

ISO (The Sensitivity of the Eye to Light)

According to his studies, H. Richard Blackwell found that in low light, human vision integrates (accumulates) light for up to about 15 seconds. The eye’s equivalent ISO also changes with the light level, rising as the amount of rhodopsin in the retina increases.

If you wore sunglasses during the day and your eyes have adapted well to the dark, you can see very faint stars far from the light pollution of the city. Based on this, it’s possible to make a reasonable estimate of how well we can see in low light.

In his tests, Blackwell estimated that the dark-adapted eye is approximately ISO 800.

During the day, the eye is much less sensitive: more than 600 times less, according to a study carried out in Toronto in 1958. That would put its daytime ISO equivalent at around 1.
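The arithmetic behind that estimate, as a short Python sketch. It uses only the two figures quoted above (ISO 800 when dark-adapted, and a sensitivity drop of about 600 times) and also expresses the drop in photographic stops, where each stop is a factor of 2.

```python
import math

DARK_ADAPTED_ISO = 800  # Blackwell's estimate for the dark-adapted eye
DAYLIGHT_DROP = 600     # daytime eye is "more than 600 times" less sensitive

daylight_iso = DARK_ADAPTED_ISO / DAYLIGHT_DROP
stops = math.log2(DAYLIGHT_DROP)  # one stop = a factor of 2 in sensitivity

print(f"daylight ISO equivalent: ~{daylight_iso:.2f}")
print(f"sensitivity difference: ~{stops:.1f} stops")
```

This gives an ISO of about 1.3 and a difference of a little over 9 stops. Since the drop is "more than" 600 times, the true daytime figure would be at or below this.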


Dynamic Range of the Eye:

The dynamic range of human vision is greater than that of any film or digital camera. The eye is able to function in bright sunlight and still see the dim light of stars.

Blackwell even describes some tests you can try yourself. Here’s a simple experiment:

- Go outside with a star map on a clear night with a full moon.

- Wait a few minutes for your eyes to adjust.

- Now, find the faintest stars you can detect while the full moon is in your field of vision.

- Try to keep both the moon and the stars at least about 45 degrees above the horizon (toward the zenith).

If the sky is clear and you are away from city lights, you will probably be able to see stars of magnitude 3. The full moon has a stellar magnitude of -12.5.

If you can see stars of magnitude 2.5, the magnitude range you are seeing is 2.5 - (-12.5) = 15.

Every 5 magnitudes is a factor of 100 in brightness, so 15 magnitudes is 100 × 100 × 100 = 1,000,000. So the dynamic range in this relatively low-light condition is about 1 million to 1 (about 20 stops, since 2^20 ≈ 1 million), maybe more!
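The same calculation in Python. Astronomical magnitudes are logarithmic, with 5 magnitudes defined as exactly a factor of 100 in brightness, so a magnitude difference Δm corresponds to a brightness ratio of 100^(Δm/5); converting that ratio to stops is its base-2 logarithm. The magnitudes are the ones from the experiment above.

```python
import math


def brightness_ratio(faint_mag: float, bright_mag: float) -> float:
    """Brightness ratio between two objects; 5 magnitudes = a factor of 100."""
    return 100 ** ((faint_mag - bright_mag) / 5)


FULL_MOON_MAG = -12.5
FAINTEST_STAR_MAG = 2.5

ratio = brightness_ratio(FAINTEST_STAR_MAG, FULL_MOON_MAG)
print(f"dynamic range: {ratio:,.0f} to 1, or about {math.log2(ratio):.1f} stops")
```

The exact figure is just under 20 stops (log2 of 1,000,000 is about 19.9), which rounds to the 20 stops quoted above.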


Focal Length of the Eye:

What is the focal length of the eye? We always hear that the 50mm lens is the closest to human vision. But is it really?

In my own research, I found figures ranging from 17mm to 50mm. For a more reliable answer, the reference “Light, Color and Vision, Hunt et al., Chapman and Hall, Ltd, London, 1968, page 49” states that for a “standard European adult”, the front (object-side) focal length of the eye is 16.7 mm and the rear (image-side) focal length is 22.3 mm.
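Those two figures are consistent with basic optics, which this Python sketch checks. It assumes two standard relations: an optical system’s power in diopters is the refractive index on the object side divided by the front focal length in meters (air, n = 1), and the ratio of rear to front focal length equals the ratio of the refractive indices on the two sides. The second relation should recover roughly the refractive index of the watery fluid inside the eye, about 1.336.

```python
FRONT_FOCAL_MM = 16.7  # object side (in air), Hunt et al. figure
REAR_FOCAL_MM = 22.3   # image side (inside the eye), Hunt et al. figure

# Optical power P = n / f with n = 1 for air and f in meters.
power_diopters = 1 / (FRONT_FOCAL_MM / 1000)

# f' / f = n' / n, so with n = 1 the ratio gives the index of the eye's interior.
implied_index = REAR_FOCAL_MM / FRONT_FOCAL_MM

print(f"optical power: ~{power_diopters:.1f} diopters")
print(f"implied refractive index inside the eye: ~{implied_index:.3f}")
```

The result, roughly 60 diopters and an index close to that of water, suggests the 16.7 mm and 22.3 mm values describe one optical system seen from its two sides rather than two competing answers.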

FAQs on Human Eye Resolution

Can humans see in 16K?

Do our eyes see in 4K?

Yes, human eyes can see 4K and even 8K resolutions. However, it is difficult to tell the two apart: your eyes can perceive the difference between 8K and 4K only if you have high visual acuity or sit extremely close to the screen.
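To put "extremely close" in numbers, this Python sketch estimates the distance beyond which individual pixels become too small to resolve. It assumes that 20/20 vision resolves about 1 arc-minute and uses a hypothetical 65-inch 16:9 TV; both are illustrative assumptions rather than figures from this article.

```python
import math

ACUITY_ARCMIN = 1.0  # assumed resolving limit for 20/20 vision


def screen_width_in(diagonal_in: float, aspect_w: int = 16, aspect_h: int = 9) -> float:
    """Screen width from its diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def max_useful_distance_in(diagonal_in: float, horizontal_px: int) -> float:
    """Distance (inches) beyond which one pixel subtends less than ACUITY_ARCMIN."""
    pixel_pitch_in = screen_width_in(diagonal_in) / horizontal_px
    return pixel_pitch_in / math.tan(math.radians(ACUITY_ARCMIN / 60))


for name, px in (("4K", 3840), ("8K", 7680)):
    feet = max_useful_distance_in(65, px) / 12
    print(f"65-inch {name}: pixels resolvable only within ~{feet:.1f} ft")
```

Under these assumptions, 4K pixels stop being resolvable beyond roughly 4 feet from a 65-inch screen, so any visible advantage of 8K only appears when sitting closer than that.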
